Lighting

Vision & Sensors

Machine Vision Lighting Powers Industrial Automation Advances

Learn how lighting can optimize multispectral imaging, collaborative robots, and deep learning applications.

By Steve Kinney

Within the machine vision space, a new wave of buzzwords emerges at tradeshows and in the press every few years. While some technologies have perhaps been overhyped or released without real-world applications in mind, many others are successfully deployed on factory floors and in warehouses today.

Technologies such as multispectral imaging, collaborative robots (cobots), and, yes, artificial intelligence (AI) and deep learning can boost efficiency, enhance productivity and output, improve quality, and help drive revenue. System success depends not only on identifying the right application but also on understanding how the components of a machine vision system work together. Lighting is a crucial consideration when designing and specifying a system because it is fundamental to image quality. Lighting implementations also vary from application to application more than those of any other system component, so understanding how lighting affects the rest of the system lays the foundation for success.

Robots on the Rise

Machine vision and industrial automation systems have never been more important than they are today. They remove people from repetitive and sometimes dangerous jobs while protecting businesses against disruptions and helping them remain competitive. One year after the COVID-19 pandemic began, figures from the Association for Advancing Automation (A3) show that machine vision sales in North America reached $3.03 billion as companies looked for ways to stay productive in the face of COVID-related disruptions and labor issues.

A3 figures also show that robot sales in North America hit record highs in every quarter of 2022, reaching a total of 44,196 robots ordered and a record $2.38 billion for the year, increases of 11% and 18%, respectively. Robotics growth areas in 2022 included food services and agriculture, with applications ranging from robots cooking and serving food to harvesting and picking fruits and vegetables. Growth in the food industry continued a trend from 2021, when, according to International Federation of Robotics (IFR) figures, robot installations in the food and beverage sector rose 25%, reaching a peak of more than 3,400 robots.

Automation systems in food and beverage are just one example of a field where growing customer requirements drive demand for evolving technologies. While inspecting food with machine vision is commonplace today, newer applications use novel lighting methods for multispectral imaging, which reveals additional layers of information for difficult industrial imaging tasks.

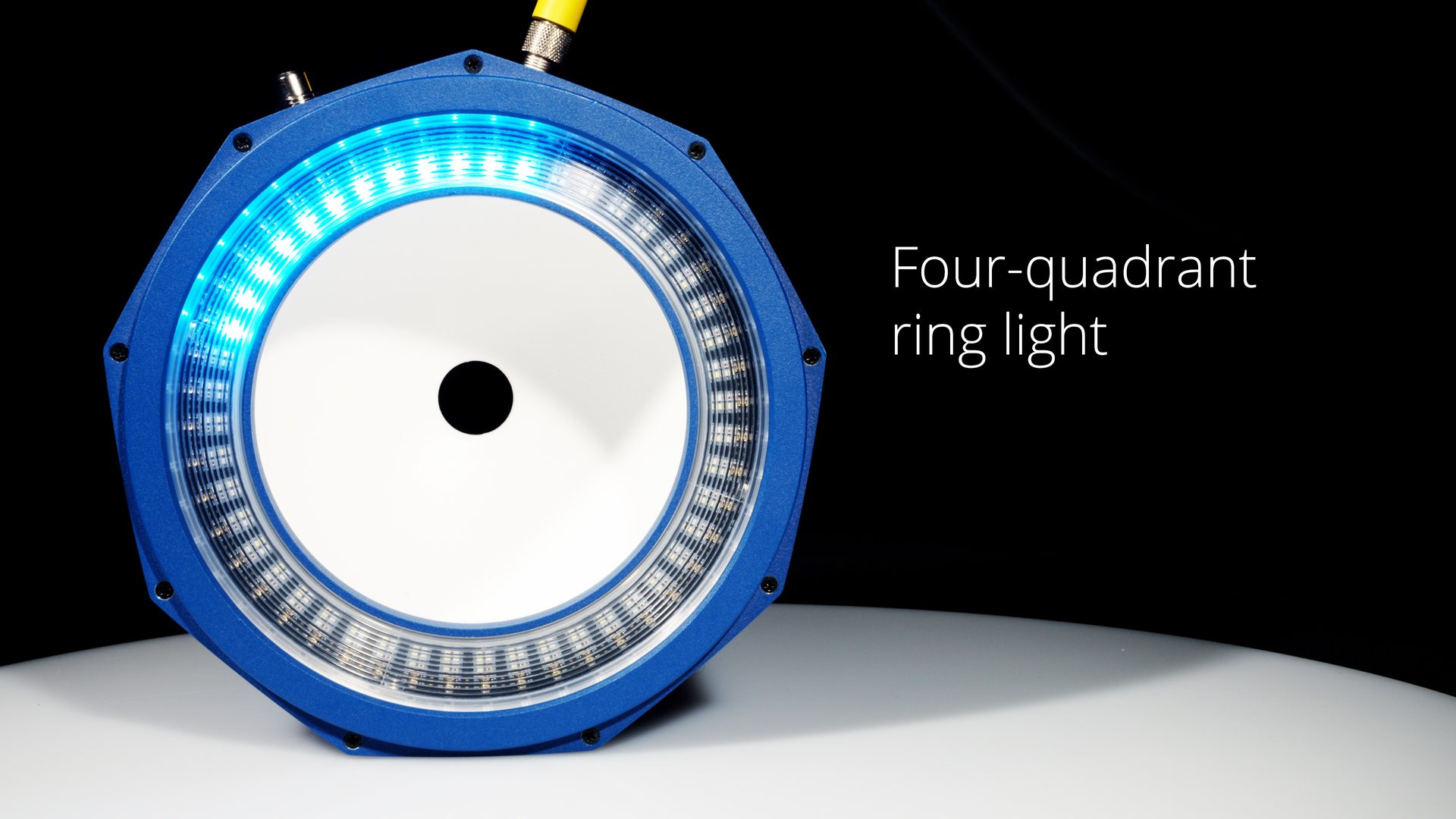

A compact, all-in-one lighting solution offering multiple lighting options (including RGBW, NIR, and UV wavelengths) saves space, cost, and effort when deploying multispectral imaging systems. Source: Smart Vision Lights

An Alternative Multispectral Imaging Method

Hyperspectral imaging typically captures narrow, usually contiguous spectral bands, possibly hundreds or thousands of them. Multispectral imaging instead uses up to 10 strategically selected bands, which may vary in bandwidth and need not be contiguous. Example multispectral applications include deploying near-infrared (NIR) or shortwave infrared (SWIR) lights to identify rocks or other inorganic materials in foods such as rice, beans, or grains; combining color and NIR/SWIR lights to determine whether fruit is ripe (color) or bruised (SWIR/NIR); and using a multispectral lighting setup to analyze fat, muscle, and bone content in meat.

Two primary options exist for deploying such imaging systems: fully integrated multispectral cameras, or a simpler approach that pairs a grayscale camera with a light source whose RGB lighting channels are controlled independently. To create a color image with the simpler method, the light source cycles through the red, green, and blue channels one at a time. The camera captures one image per channel, each recording the object’s reflectance in that color, and the three images are then combined into a single RGB image.
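The sequential capture described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a vendor API: `acquire_sequence`, `compose_rgb`, and the `capture` callable are hypothetical names, and frames are plain nested lists of gray values rather than real camera buffers.

```python
def acquire_sequence(capture, channels=("red", "green", "blue")):
    """Take one exposure per lighting channel.

    `capture` is a hypothetical, integrator-supplied callable: it switches on
    only the named channel, triggers the grayscale camera, and returns the
    frame as a list of rows of gray values.
    """
    return {channel: capture(channel) for channel in channels}


def compose_rgb(frames):
    """Stack the red, green, and blue frames into rows of (r, g, b) tuples.

    Every output pixel holds a measured value for all three channels, so the
    color image keeps the camera's full pixel resolution.
    """
    red, green, blue = frames["red"], frames["green"], frames["blue"]
    height, width = len(red), len(red[0])
    for frame in (red, green, blue):
        if len(frame) != height or any(len(row) != width for row in frame):
            raise ValueError("all frames must have the same dimensions")
    return [
        [(red[y][x], green[y][x], blue[y][x]) for x in range(width)]
        for y in range(height)
    ]


# Usage with a simulated 2 x 2 scene standing in for real hardware.
SCENE = {
    "red":   [[200, 30], [120, 10]],
    "green": [[40, 180], [120, 10]],
    "blue":  [[20, 25], [120, 220]],
}
rgb = compose_rgb(acquire_sequence(lambda channel: SCENE[channel]))
print(rgb[0][0])  # (200, 40, 20): a reddish pixel
```

Because the three exposures happen at different moments, the part must stay still (or the frames must be registered) so that each output pixel combines readings from the same point on the object.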

Features can also be isolated from the rest of the image based on how they respond to individual lighting channels. The sequential approach offers other benefits as well. Unlike standard color imaging, where neighboring pixels (for example, behind a Bayer color filter array) are combined to resolve color, this multispectral approach produces images at the grayscale camera’s full pixel resolution. In addition, filters become unnecessary, which lowers the cost and complexity of the multispectral imaging system.
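One simple way to isolate a feature by its channel response, borrowed from remote-sensing indices such as NDVI, is to compute a per-pixel normalized difference between two channel images and threshold it. The sketch below assumes two co-registered frames (labeled NIR and red here) and an integrator-chosen threshold; the function names, frame values, and threshold are hypothetical.

```python
def normalized_difference(band_a, band_b, eps=1e-9):
    """Per-pixel (a - b) / (a + b); lies in [-1, 1] for non-negative inputs.

    Dividing by the sum cancels a brightness change that scales both bands
    equally, leaving the material's relative response to the two channels.
    """
    return [
        [(a - b) / (a + b + eps) for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(band_a, band_b)
    ]


def feature_mask(band_a, band_b, threshold):
    """Mark pixels whose normalized difference falls below `threshold`."""
    return [
        [value < threshold for value in row]
        for row in normalized_difference(band_a, band_b)
    ]


# Hypothetical frames: the background reflects strongly under NIR,
# while one pixel (row 0, column 1) reflects weakly under NIR.
nir = [[200, 80], [190, 210]]
red = [[100, 120], [95, 100]]
print(feature_mask(nir, red, threshold=0.0))
# [[False, True], [False, False]]
```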

Many lighting options are available today for multispectral imaging systems based on grayscale cameras, including linear, ring, bar, and brick formats in white, UV, blue, cyan, green, red, infrared, SWIR, RGB, and RGBW, suited to a range of applications. Recently introduced lighting solutions combine RGBW, NIR, and UV wavelengths in a single system, plus additional ports for auxiliary lights such as spot or linear lights, so one imaging system can cover whichever spectral bands an application requires. Integrating six lights into one compact form factor saves space, cost, and effort.

Collaboration Requires Flexibility

Designed to work alongside humans, cobots are increasingly popular in industrial automation thanks to their affordability, ease of use, and flexibility, among other benefits. Market and consumer data company Statista estimates that cobot sales will increase from 353,330 units to 735,000 units in 2025, highlighting rapid growth in this robotics sector.

Because cobots have six and sometimes seven degrees of freedom (axes) and 360° of rotation on all wrist joints, these robot arms offer a great deal of movement flexibility, opening up a wide range of applications. These include machine tending, material handling, welding, dispensing, assembly, quality inspection, and many more. Once a manufacturer identifies a viable application for a cobot, integrating it with other machinery and equipment requires an experienced integrator who can navigate a highly technical process.

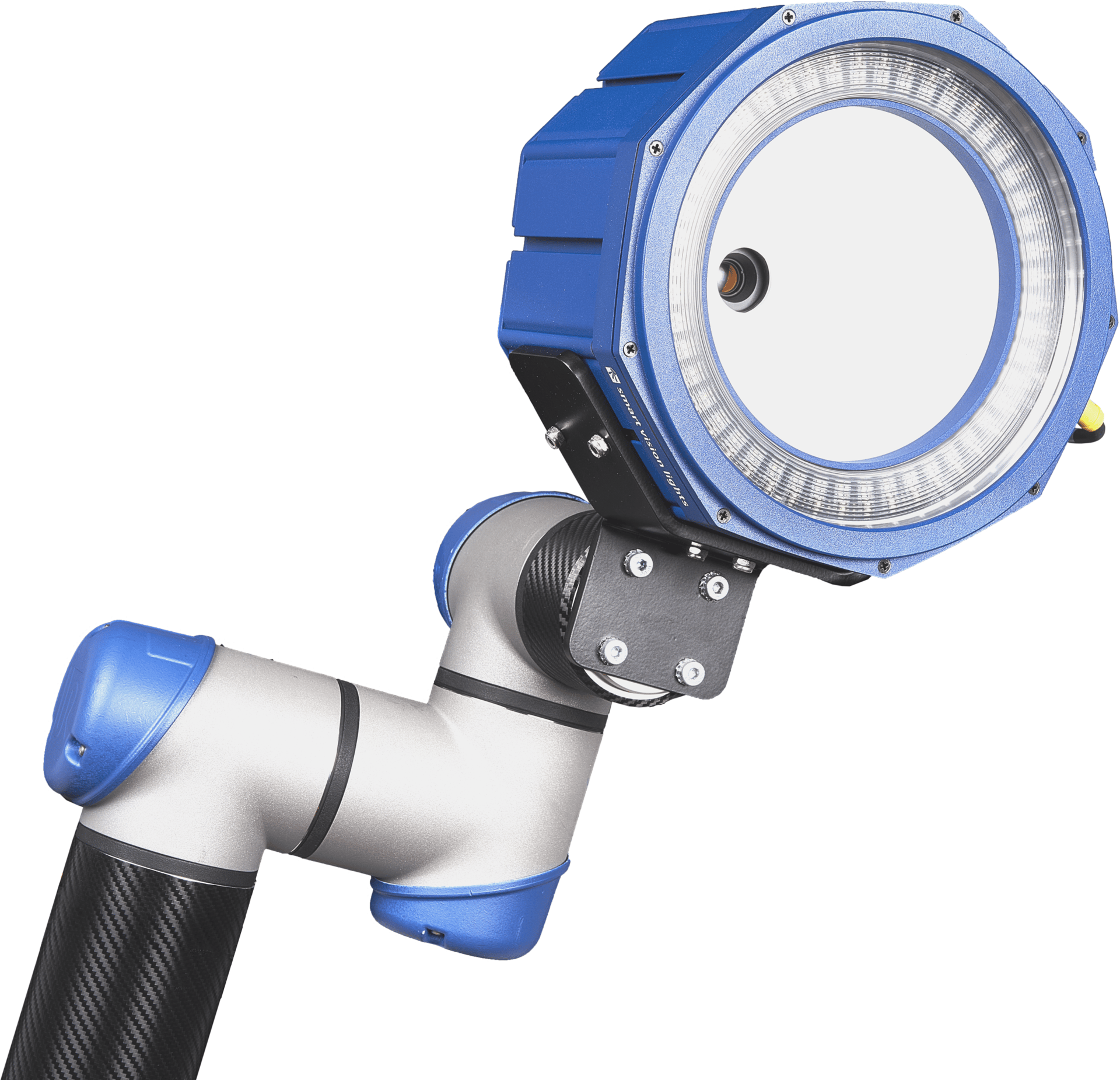

For a system that gives the robot “eyes,” this means integrating not only the robot but also a camera, a processing unit, machine vision software, and, when a camera’s integrated illumination won’t suffice, external lighting. In applications such as automotive manufacturing, where the cobot must maneuver around or inside the vehicle to perform various inspections, a camera can mount directly to a light attached to the end of the robot’s arm.

Size and space constraints present challenges, but integrators have options. One is a compact, multifunctional unit that combines a dome light, a low-angle dark field ring light, a mid-angle dark field ring light, an RGBW ring light, a four-quadrant ring light, and an NIR ring light. These units also include a sequencing controller, a four-zone multi-drive LED driver, and cables, providing advanced control for complex assembly and inspection tasks such as inspecting different sections of a large part. Such a light even allows the robot’s end-of-arm tooling to perform photometric stereo for tasks such as reading and verifying codes on a tire.

Collaborative robots requiring flexibility in terms of movement and space can deploy a camera mounted directly to a multifunctional LED light at the end of a cobot arm. Source: Smart Vision Lights
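Photometric stereo, mentioned above for reading codes on a tire, recovers surface orientation from several images of a stationary part, each lit from a different known direction. The sketch below shows the classic per-pixel least-squares formulation under stated assumptions: a Lambertian (matte) surface, four calibrated light directions such as a four-quadrant ring light might provide, and no shadows or specular highlights. The 45° light geometry and all function names are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3 x 3 matrix stored as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve a @ x = b for a 3 x 3 system with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions must span three dimensions")
    x = []
    for col in range(3):
        m = [row[:] for row in a]  # copy, then swap in b as column `col`
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def photometric_stereo_pixel(lights, intensities):
    """Least-squares albedo and unit surface normal for one pixel.

    Lambertian model: intensity_k = albedo * dot(light_k, normal).
    With g = albedo * normal, solve the normal equations (L^T L) g = L^T I.
    Assumes every reading is lit (positive dot product, no shadow).
    """
    ltl = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    lti = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    g = solve3(ltl, lti)
    albedo = math.sqrt(sum(c * c for c in g))
    return albedo, tuple(c / albedo for c in g)


# Hypothetical four-quadrant geometry: lights at 45 degrees elevation,
# 90 degrees apart in azimuth (unit vectors pointing toward each light).
S = math.sqrt(0.5)
QUADRANT_LIGHTS = [(S, 0.0, S), (0.0, S, S), (-S, 0.0, S), (0.0, -S, S)]

# Simulate a patch tilted toward +x with albedo 0.8, then recover it.
true_normal = (0.6, 0.0, 0.8)
readings = [0.8 * sum(l[i] * true_normal[i] for i in range(3)) for l in QUADRANT_LIGHTS]
albedo, normal = photometric_stereo_pixel(QUADRANT_LIGHTS, readings)
print(albedo, normal)  # approximately 0.8 and (0.6, 0.0, 0.8)
```

With four lights the system is overdetermined, so least squares averages out some sensor noise; a real implementation would run this for every pixel and typically reject shadowed or specular readings first.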

Does Lighting Matter in Deep Learning?

While perhaps the most overhyped of the technologies covered here, deep learning has found a niche in industrial automation. Rather than the “machine vision replacement” it was hailed as seven or so years ago, this subset of AI has proven valuable as a tool that augments rules-based machine vision software and systems. Examples include deep learning for optical character recognition (OCR), which removes the need for extensive font training and makes deployment significantly easier, and defect detection in electronics and semiconductor inspection, where deep learning can better accommodate the wide range of defects that may appear on a wafer.

Successfully implementing a machine vision system, whether traditional or deep learning-based, requires a competent and thorough workflow. With deep learning, that means starting with an application analysis and developing an imaging solution that acquires suitable, high-quality images, which form a quality data set for labeling and training.

While perhaps cliché, the adage “garbage in, garbage out” applies to deep learning as much as anywhere. Clean, labeled data lays the groundwork for success, while low-quality data (images) produces an ineffective model, wasting time, effort, and money. Lighting is an indispensable part of acquiring high-quality images. Deep learning algorithms can be somewhat forgiving of less-than-ideal lighting, but the popular notion that lighting does not matter in deep learning is simply not true.

A recent case study analyzed a deep learning-based machine vision system that inspected parts under both good and bad lighting, with the goal of finding defects and measuring the performance of each configuration. The training set consisted of 35 images of undamaged (good) parts and 35 images of damaged (bad) parts. The good-lighting network achieved an overall accuracy of 95.71% with an average inference time of 43.21 ms, while the bad-lighting network reached an overall accuracy of 82.86% with an average inference time of 43 ms. Inference speed was essentially unchanged, but accuracy dropped by nearly 13 percentage points. Contrary to popular myth, deep learning systems require high-quality lighting.
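The reported accuracies can be related back to image counts. The study summary gives only the training-set size, so the sketch below assumes, hypothetically, that accuracy was measured over 70 images, and finds the numbers of correct predictions consistent with each rounded percentage. The function name and the evaluation-set size are assumptions.

```python
def counts_matching(reported_pct, n_images):
    """Correct-prediction counts k in 0..n_images for which k / n_images,
    expressed as a percentage, rounds to `reported_pct` (two decimals)."""
    return [
        k for k in range(n_images + 1)
        if abs(100 * k / n_images - reported_pct) < 0.005
    ]


N_EVAL = 70  # assumption: the evaluation set size is not given in the study
for label, pct in (("good lighting", 95.71), ("bad lighting", 82.86)):
    for k in counts_matching(pct, N_EVAL):
        print(f"{label}: {k}/{N_EVAL} correct, {N_EVAL - k} misclassified")
# good lighting: 67/70 correct, 3 misclassified
# bad lighting: 58/70 correct, 12 misclassified
```

Under this assumption, the bad-lighting network made four times as many errors (12 versus 3), while the two inference times differ by only 0.21 ms: lighting changed what the network got right, not how fast it ran.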

A high-quality data set requires high-quality images, and those images require sufficient contrast, which good lighting helps deliver. Some deep learning systems also need specialized lighting, because certain quality control applications call for multiple inspections of a single product, each with a different lighting setup for tasks such as checking shape, size, orientation, and presence/absence. A lighting solution that covers multiple wavelengths and lighting types gives systems integrators additional flexibility in capabilities, space, and cost.
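Contrast can be quantified before any training begins, which helps when comparing candidate lighting setups. The sketch below computes a Michelson-style contrast, (Imax - Imin) / (Imax + Imin), from the mean gray levels of a feature region and its background. The function names, the sampled gray levels, and the use of this ratio as a lighting-selection metric are illustrative assumptions, not part of the case study.

```python
def mean(values):
    """Arithmetic mean of a non-empty sequence of gray values."""
    return sum(values) / len(values)


def roi_contrast(feature_pixels, background_pixels):
    """Michelson-style contrast between two regions of interest,
    computed on their mean gray levels. Returns 0.0 for two black
    regions and 1.0 when one region is completely dark."""
    a, b = mean(feature_pixels), mean(background_pixels)
    hi, lo = max(a, b), min(a, b)
    return 0.0 if hi + lo == 0 else (hi - lo) / (hi + lo)


# Hypothetical gray levels sampled from a defect and the surrounding
# surface under two lighting setups.
good = roi_contrast([38, 40, 42], [198, 200, 202])
poor = roi_contrast([108, 110, 112], [138, 140, 142])
print(f"good lighting: {good:.2f}, poor lighting: {poor:.2f}")
# good lighting: 0.67, poor lighting: 0.12
```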

Lead With Lighting

Machine vision and industrial automation systems of all types continue to evolve, thanks in part to advances in the technologies and components that comprise them. Lighting is one of the best examples. Unfortunately, in some systems lighting is the last element to be specified, developed, or funded, if it is addressed at all. Today, with the many vision-specific and application-specific lighting options available, lighting can help optimize nearly every aspect of a machine vision system.