Systems Integration


Vision & Sensors


An introduction to when and how to incorporate deep learning in industrial automation applications.

By David L. Dechow

Machine Vision Systems Integration: Deep Learning

Deep learning has become a useful tool in the integration and implementation of machine vision systems in the automation environment. It provides value in certain industrial inspection applications, including defect detection and assembly verification, especially when the features to be detected require subjective decisions like those a human visual inspector might make. Many of these applications also might benefit from a solution that combines analytical ("traditional") machine vision algorithms with deep learning.

In all cases, though, competent systems integration of components and software (from application analysis through design and implementation to final validation) remains critical to the reliability and long-term success of every solution.

AI, deep learning, and machine vision

Deep learning for image recognition and classification is a relatively new (since about 2010) and specific "artificial intelligence" (AI) technique for implementing machine learning (ML) in a variety of applications, including vision.

Historically, this technology evolved from the broader concept of AI, which has been a focus of scientists since even before the era of digital computing. Early research into "computer vision" (making computers "see" and comprehend images in a way similar to humans) introduced many of the image processing and analysis algorithms that became part of the engineering discipline known for decades as "machine vision" within the automation marketplace.

The so-called “traditional” machine vision implementation techniques involve creating analytical “rules” about a target image that are executed using algorithms that return specific information about (or perhaps measurements of) the object or scene. On the other hand, deep learning software for machine vision does not externally use “discrete” or “rule-based” analysis (although internally much of the low-level analysis is similar). Instead, it “learns” to recognize objects and features through a data-centric training process where many representative example images are provided to the deep learning system.
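To make the contrast concrete, here is a toy sketch. The rule-based function applies a threshold an engineer chose by hand, while the "learned" function derives its decision from labeled examples. A simple nearest-centroid classifier on two hand-made features stands in for a deep network purely for illustration (a real deep learning model learns its own features directly from pixels), and the "images" are hypothetical flat lists of grayscale values.

```python
from typing import Dict, List, Sequence

# Hypothetical image representation: a flat list of grayscale pixel values (0-255).
Image = Sequence[int]


def dark_pixel_fraction(image: Image, dark_level: int = 50) -> float:
    """Fraction of pixels darker than dark_level (a hand-picked feature)."""
    return sum(1 for p in image if p < dark_level) / len(image)


def rule_based_inspect(image: Image, max_dark_fraction: float = 0.05) -> str:
    """Traditional approach: an engineer writes the rule and picks the threshold."""
    return "bad" if dark_pixel_fraction(image) > max_dark_fraction else "good"


def features(image: Image) -> List[float]:
    """Toy feature vector: normalized mean brightness and dark-pixel fraction."""
    return [sum(image) / len(image) / 255.0, dark_pixel_fraction(image)]


def train_centroids(examples: Dict[str, List[Image]]) -> Dict[str, List[float]]:
    """Stand-in for training: average the feature vectors of each labeled class."""
    centroids = {}
    for label, images in examples.items():
        vectors = [features(img) for img in images]
        centroids[label] = [sum(col) / len(vectors) for col in zip(*vectors)]
    return centroids


def learned_inspect(image: Image, centroids: Dict[str, List[float]]) -> str:
    """Assign the label whose learned centroid is closest to the image's features."""
    f = features(image)
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(f, centroids[label])),
    )
```

In the rule-based function the decision logic is written out explicitly; in the learned version it comes entirely from whichever example images are passed to `train_centroids`.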

Broadly used in computer vision for image recognition and classification in consumer tasks, deep learning leverages and expands upon early “neural network” concepts from AI and now is being applied successfully to a growing number of applications in industrial machine vision. But the successful design, configuration, and implementation of applications using deep learning calls for an integration approach somewhat different from that used in traditional machine vision projects.

Deep learning systems integration: an overview

Integration, of course, is the broad undertaking of “making the system work” for a targeted vision application. As with any automation project, using a formal integration process that details steps for planning, design, implementation, and deployment always helps ensure success. (The names for your integration process tasks might be different, but nonetheless it is important to have and follow a well-considered strategy.) For this discussion, our overview will focus on the design and execution elements of the integration process that are unique to the success of a deep learning application.

It is important to note that implementing computer vision deep learning tools and techniques in industrial applications may require an expanded team with some skillsets different from those used in standard machine vision. Including a data scientist in what traditionally might have been an "engineering-discipline" team might even be a prerequisite. Some deep learning software providers offer their inspection solutions as a long-term service ("software as a service" or "SaaS") to help ensure that the customer has the necessary access to deep learning technology experts for tuning and maintenance of the software.

While the general tasks (planning, design, implementation, deployment) remain the same, the execution steps for deep learning systems integration differ from those for traditional machine vision. The key steps are:

Select an application suitable for deep learning: Undertake an application analysis to understand the needs of the system in the production setting and prepare a project specification that details the measurable outcomes that will define the success and value of the system and how those outcomes can be achieved.

Deploy a well-designed imaging system to perform data (image) acquisition and collection.

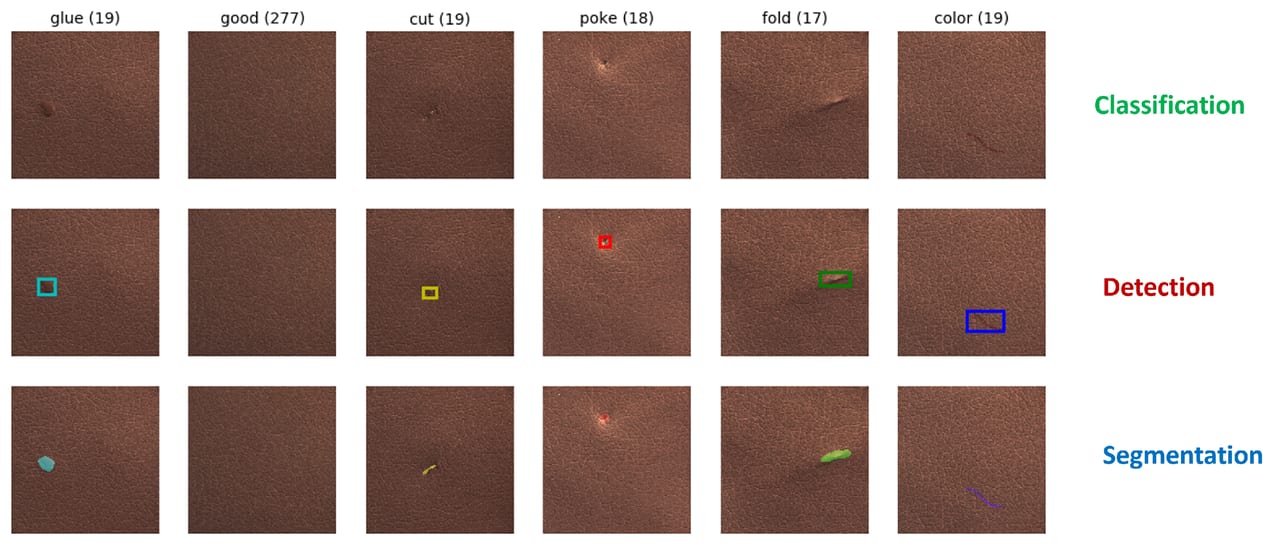

Collect representative images depicting the classes of defects or features that must be detected along with images that are “good” – the initial “dataset.”

Label some of the initial dataset for training (can involve visual evaluation and manual segmentation of features/defects in images by more than one human inspector).

Learn (train) the models and validate the results with test images from the original dataset.

Run the models and empirically test performance in production with respect to detection/classification capability, reliability, and/or other application metrics.

Re-learn as required with more data (images) (steps 3-6) to cover specific cases that didn’t succeed in production; tune the dataset(s)/labels, models and algorithms as needed to achieve required system performance.

If new classes (features/defects) are introduced, repeat steps 3-7.
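Step 5 depends on keeping some labeled images out of training. Here is a minimal sketch of a stratified hold-out split that keeps every defect class represented in the test set, assuming each image is identified by a filename and carries one class label. The `stratified_split` name and its parameters are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def stratified_split(labels: Dict[str, str], test_fraction: float = 0.2,
                     seed: int = 0) -> Tuple[List[str], List[str]]:
    """Split image IDs into train/test lists, preserving each class's share.

    labels maps an image identifier (e.g. a filename) to its class label.
    """
    by_class: Dict[str, List[str]] = defaultdict(list)
    for image_id, label in labels.items():
        by_class[label].append(image_id)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train: List[str] = []
    test: List[str] = []
    for label in sorted(by_class):
        ids = sorted(by_class[label])
        rng.shuffle(ids)
        # Hold out at least one image per class when the class has two or more.
        n_test = max(1, round(len(ids) * test_fraction)) if len(ids) > 1 else 0
        test.extend(ids[:n_test])
        train.extend(ids[n_test:])
    return train, test
```

Splitting per class matters when defects are rare: a purely random split of a small dataset can leave a defect class with no test images at all.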

Let’s take a brief look at a few of the steps and their importance to systems integration for deep learning in machine vision.

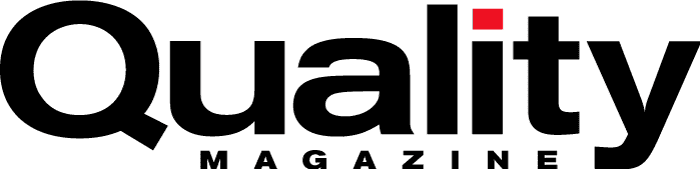

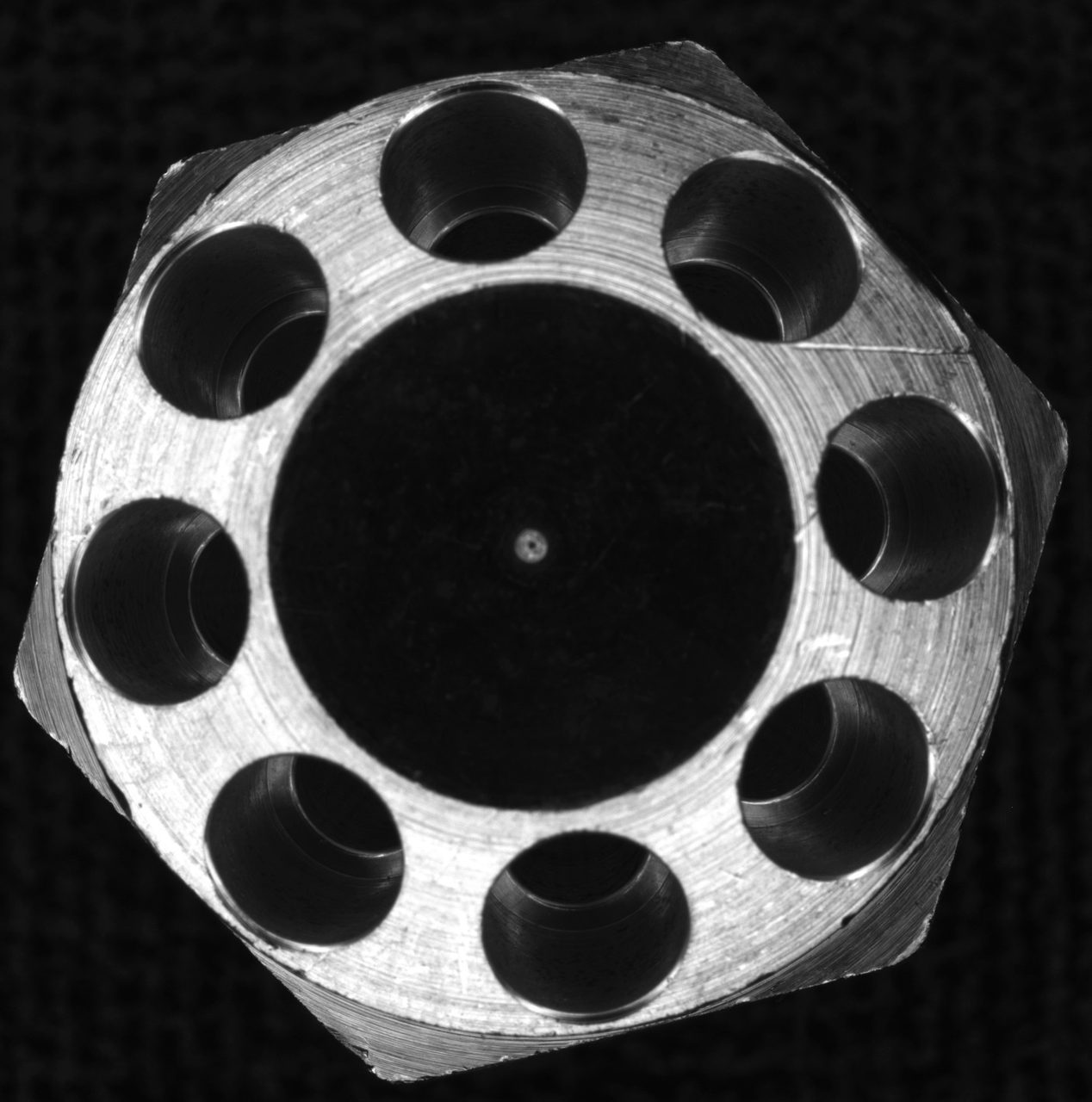

Imaging remains a key part of all machine vision applications. These two images of the same part show how correct optics and illumination must be used to highlight features of interest. Source: Integro Technologies

Applications suitable for deep learning

Some tasks that would be overly complex or virtually impossible with "rule-based" machine vision could be good candidates for deep learning. Conversely, if an application can be performed easily, reliably, and robustly using analytical machine vision tools and components, switching to deep learning would likely add unnecessary complexity and even cost to the project and probably would not be appropriate.

Generally, deep learning does well in situations that call for subjective decisions like those of a human inspector, and particularly where identifying specific features in the scene is difficult because of the complexity or variability of the image. In these cases, a good rule of thumb is that if a human could easily and quickly be trained to differentiate good parts from bad visually, deep learning likely could be suitable. However, deep learning is not a good choice for applications that require confirmation of physical metrics, in-line precision measurement or location of features, or identification of small variations in the size and shape of objects.
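The rules of thumb above can be restated as a simple screening checklist. This is only a sketch: the `ApplicationProfile` fields and the `deep_learning_fit` function are hypothetical, and a real application analysis weighs many more factors.

```python
from dataclasses import dataclass


@dataclass
class ApplicationProfile:
    # Hypothetical yes/no answers gathered during application analysis.
    subjective_decision: bool         # needs judgment like a human inspector's
    human_easily_trained: bool        # could a person quickly learn good vs. bad?
    needs_precision_metrics: bool     # gauging, precise location, small size/shape changes
    easy_with_rule_based_tools: bool  # already robust with analytical tools


def deep_learning_fit(p: ApplicationProfile) -> str:
    """Restates the article's rules of thumb; not a formal selection method."""
    if p.needs_precision_metrics:
        return "analytical"  # deep learning is a poor fit for physical metrics
    if p.easy_with_rule_based_tools:
        return "analytical"  # switching would add complexity and cost
    if p.subjective_decision and p.human_easily_trained:
        return "deep learning candidate"
    return "needs further analysis"
```

The precision-measurement check comes first because, per the text, it rules deep learning out regardless of how subjective the rest of the inspection is.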

Furthermore, it sometimes is difficult even for experts to predict in advance the level of long-term success that a deep learning application might achieve. In the industrial marketplace, where a strong premium often is placed on "return on investment" in an automation project, the inability to guarantee a level of performance in advance might be a limiting factor in selecting deep learning technology. This situation is compounded by the fact that deep learning implementations sometimes yield relatively low performance at initial startup and then require weeks or months of additional data collection and tuning to achieve the reliability typically expected in manufacturing (95-99%) in terms of false accept and false reject rates. One key to success is to collect a statistically relevant number of images of the features to be trained very early in the process, both to develop the classifications and to gain an initial estimate of the ultimate success of the training. A hybrid technology implementation also might be a solution in these cases, as discussed later.
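False accept and false reject rates, and how far a measured rate can be trusted, are straightforward to compute once results are labeled. Here is a minimal sketch, assuming each part is labeled "good" or "bad" both by ground truth and by the system. The Wilson score interval is one standard choice, used here for illustration, to show how the uncertainty of a measured rate shrinks as the number of images grows.

```python
import math
from typing import List, Tuple


def error_rates(truth: List[str], predicted: List[str]) -> Tuple[float, float]:
    """Return (false_accept_rate, false_reject_rate) for "good"/"bad" labels.

    False accept: a truly bad part was passed as good.
    False reject: a truly good part was failed as bad.
    """
    bad_total = sum(1 for t in truth if t == "bad")
    good_total = sum(1 for t in truth if t == "good")
    false_accepts = sum(1 for t, p in zip(truth, predicted) if t == "bad" and p == "good")
    false_rejects = sum(1 for t, p in zip(truth, predicted) if t == "good" and p == "bad")
    far = false_accepts / bad_total if bad_total else 0.0
    frr = false_rejects / good_total if good_total else 0.0
    return far, frr


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% confidence interval for a rate measured on n images."""
    if n == 0:
        return 0.0, 1.0  # no data: the rate could be anything
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n))
    return max(0.0, center - half), min(1.0, center + half)
```

For example, 97 correct detections out of 100 images and 970 out of 1,000 both measure 97%, but `wilson_interval` returns a much narrower range for the larger sample. That is one way to see why collecting a statistically relevant number of images early helps in estimating eventual performance.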

This shows the key tasks performed in a deep learning implementation, and examples of what those operations might look like using sample data. Source: Mariner USA

Image formation always is critical to success

Image formation requirements are the same for deep learning tools as for analytical machine vision tools. Simply put, the image to be processed must clearly show the features or defects to be classified and inspected. It is reasonable to say that deep learning might be more tolerant of imaging variation and confusing scenes in some cases, but it cannot automatically produce reliable results in applications with unreliable imaging. If a human inspector labelling the dataset cannot easily "visualize" the features/defects in collected images, or if multiple inspectors cannot agree on the labelling, it is a strong indication that the image formation is insufficient to achieve reliable results.
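Inspector agreement can be quantified instead of judged informally. Here is a minimal sketch using Cohen's kappa, a standard chance-corrected agreement statistic for two raters labelling the same images. What kappa value counts as acceptable for a given project is a judgment call this sketch does not make.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Chance-corrected agreement between two inspectors on the same images.

    1.0 means perfect agreement; 0.0 means no better than chance.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of images given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each inspector labelled at random with their own class mix.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both inspectors used one identical label throughout
    return (observed - expected) / (1 - expected)
```

A low kappa on a sample of collected images is a cheap early warning that the imaging, or the defect definitions, may need attention before much training effort is spent.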

Proper camera selection, along with knowledgeable and creative design of optics and illumination, has long been a fundamental prerequisite for machine vision systems integration, and many experts share the opinion that competent imaging contributes 85% or more to the success of an application. The bottom line is that if an object, feature, or defect is too small, too blurry, or indistinguishable from the surrounding background, then no processing technology or software approach will detect it reliably. While a deep dive into imaging system design is beyond the scope of this discussion, the key point is that imaging remains a key part of integration even for deep learning applications.
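One quick check on whether a defect is "too small" is to compute how many pixels it will span. Here is a minimal sketch, assuming a known field of view and sensor resolution along one axis. The 3-pixel minimum is an assumed rule of thumb rather than a standard, and the calculation covers only sampling; optics, blur, and contrast also decide whether a feature is visible.

```python
def pixels_across_feature(feature_size_mm: float, field_of_view_mm: float,
                          sensor_pixels: int) -> float:
    """How many pixels a feature spans along one image axis."""
    mm_per_pixel = field_of_view_mm / sensor_pixels
    return feature_size_mm / mm_per_pixel


def feature_resolvable(feature_size_mm: float, field_of_view_mm: float,
                       sensor_pixels: int, min_pixels: float = 3.0) -> bool:
    """min_pixels is an assumed rule-of-thumb minimum, not a universal standard."""
    span = pixels_across_feature(feature_size_mm, field_of_view_mm, sensor_pixels)
    return span >= min_pixels
```

For example, with hypothetical numbers, a 0.2 mm defect in a 200 mm field of view imaged across 2448 pixels spans only about 2.4 pixels. Halving the field of view to 100 mm brings it to about 4.9 pixels.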

Data reigns

In the general integration process for deep learning implementations noted above, the collection of images used to train models takes the place of the algorithmic image analysis that is the hallmark of analytical machine vision techniques. Here the image is the data, and both the amount and the quality of that data directly impact system success. Deep learning/AI experts often work to improve a model by modifying and tuning code, but a different paradigm is emerging, driven by industry leader Andrew Ng (Coursera, Landing.ai), that promotes a "data-centric" rather than a "model-centric" emphasis. For those integrating industrial machine vision using deep learning, the brief takeaway is that image quality is just as important as image quantity, and that the iterative process of labelling and learning will improve system results.
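In practice, a data-centric workflow often begins by finding inconsistent labels. Here is a minimal sketch, assuming several inspectors label each image. Images where the inspectors agree get a consensus label, and the rest are flagged for re-review. The `review_queue` name and the `min_agreement` policy are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def review_queue(annotations: Dict[str, List[str]],
                 min_agreement: float = 1.0) -> Tuple[Dict[str, str], List[str]]:
    """Split images into consensus labels and a list flagged for re-review.

    annotations maps an image ID to the labels given by several inspectors.
    min_agreement is the fraction of inspectors who must agree (assumed policy).
    """
    consensus: Dict[str, str] = {}
    flagged: List[str] = []
    for image_id, labels in annotations.items():
        if not labels:
            flagged.append(image_id)  # nobody labelled it yet
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            consensus[image_id] = label
        else:
            flagged.append(image_id)
    return consensus, flagged
```

The default of requiring unanimous agreement is deliberately strict; relaxing `min_agreement` trades label consistency for a larger usable dataset.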

Hybrid machine vision solutions merge analytical and deep learning tools

While deep learning can be a stand-alone solution in certain use cases, many applications will benefit from a hybrid implementation of both analytical and deep learning tools. For example, the iterative process of collecting images and training models to reach the required reliability might take days, weeks, or in some cases months of image collection and continued re-learning or tuning of the deep learning model(s). During this process, analytical machine vision tools could in many cases "bridge the gap," keeping the system functional enough to detect some level of non-conforming parts and even automatically labelling the related images for later use in a deep learning dataset. In this hybrid configuration, an inspection can be partially or even fully operational while enough images are collected to make the deep learning algorithms reliable.
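The bridging idea can be sketched as auto-labelling from an analytical measurement. This assumes a hypothetical rule-based tool (for example, blob analysis) that reports a defect area in pixels for each image. Clearly small and clearly large areas are labelled automatically, and the ambiguous middle band is queued for a human inspector. The function name and thresholds are placeholders to be set per application.

```python
from typing import Dict, List, Tuple


def bootstrap_labels(defect_area_px: Dict[str, float],
                     good_max: float, bad_min: float
                     ) -> Tuple[Dict[str, str], List[str]]:
    """Auto-label images from an analytical measurement; queue the rest.

    defect_area_px maps image ID -> defect area measured by a rule-based tool.
    Areas at or below good_max are labelled "good", areas at or above bad_min
    are labelled "bad", and anything in between goes to a human inspector.
    """
    if good_max >= bad_min:
        raise ValueError("good_max must be below bad_min")
    auto: Dict[str, str] = {}
    for_human: List[str] = []
    for image_id, area in defect_area_px.items():
        if area <= good_max:
            auto[image_id] = "good"
        elif area >= bad_min:
            auto[image_id] = "bad"
        else:
            for_human.append(image_id)
    return auto, for_human
```

The same thresholds that let the analytical tool pass or fail obvious parts on the line also decide which images can enter the dataset without manual labelling.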

Ultimately, the entire project might be most reliable and robust with a hybrid combination of both analytical and deep learning tools. One scenario would be to have the traditional machine vision toolset “pre-screen” features and quickly pass or fail obvious good and non-conforming parts. Images that are not confidently classified at that point could be further analyzed by deep learning to make a more subjective decision based on the learned parameters.
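The pre-screen scenario amounts to a two-stage cascade. Here is a minimal sketch, assuming a hypothetical `analytical_score` function where higher values mean a part is more likely defective, and a hypothetical `deep_classify` callable that returns "pass" or "fail". The `pass_below` and `fail_above` thresholds are placeholders.

```python
from typing import Any, Callable, Tuple


def hybrid_inspect(image: Any,
                   analytical_score: Callable[[Any], float],
                   deep_classify: Callable[[Any], str],
                   pass_below: float, fail_above: float) -> Tuple[str, str]:
    """Return (decision, stage), where stage names the tool that made the call."""
    score = analytical_score(image)  # fast rule-based measurement
    if score < pass_below:
        return "pass", "analytical"  # obviously good part
    if score > fail_above:
        return "fail", "analytical"  # obviously non-conforming part
    # Not confidently classified by the rules: defer to the learned model.
    return deep_classify(image), "deep learning"
```

Returning the stage alongside the decision makes it easy to track how many parts actually reach the deep learning model, which can help when tuning the two thresholds.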

In conclusion, getting the most out of deep learning as a tool in the machine vision systems arsenal remains a function of a well-considered integration protocol. Hopefully this discussion has provided a useful baseline for success in your next application.

“Machine vision” or “computer vision”?

These two terms do not necessarily describe identical technology implementations, though they sometimes are used interchangeably. The result can be a lack of clarity and understanding when manufacturers and users discuss the implementation of a particular product or system.

From a historical point of view, the term computer vision has been used since the early 1950s to describe a broad science related to AI. Machine vision is a term first used around 1970 generally in the context of an engineering technology (which coincidentally utilizes many of the algorithms that emerged from computer vision research and development).

Today, there are many ways one could differentiate the terms: based on technical or historical context, on imaging or processing concepts, or even for marketing purposes. Practically, though, in common usage when talking about industrial automation, "computer vision" often seems to describe an implementation of "deep learning," while "machine vision" means the use of "analytical," discrete processes and algorithms. There is no real consensus, though, and clarification might be needed if the meaning of the terms is not obvious in your project discussions.

Opening Video Source: DKosig/Vetta via Getty Images.

David L. Dechow is the principal vision systems architect at Integro Technologies. For more information, email ddechow@integro-tech.com or visit http://www.integro-tech.com.
