Machine Vision 101

Vision & Sensors

A Quick Guide to Choosing Machine Vision for Collaborative Applications

What are the main considerations to keep in mind?

By Mike DeGrace

The first industrial machine vision systems date back to the beginning of the modern industrial robot age in the 1980s. Today, augmenting collaborative robots (cobots) with vision enables them to work with higher precision, greater flexibility, and more intelligence. Integration is not a one-size-fits-all process, however, since the requirements of each application can vary greatly.

Consider an electronics assembly line, for example. A cobot assembling printed circuit boards (PCBs) could use machine vision for two or more high-precision tasks, such as locating components on the PCB and inspecting the quality of solder joints after assembly.

Meanwhile, simpler applications, such as detecting whether a product carries a sticker indicating that it has passed inspection, can be handled by basic 2D camera-based systems at a lower price point.

The versatility of machine vision has helped the global machine vision market grow by 10.7% between 2021 and 2022 to reach a value of USD 12 billion, according to MarketsandMarkets, and the market is projected to reach USD 17.2 billion by 2027. Major factors driving the adoption of machine vision in collaborative automation include the increasing affordability of machine vision hardware, usability enhancements, and the rise of artificial intelligence. Together, these trends improve performance, make new cobot applications possible, and reduce the total cost of ownership (TCO) of machine vision projects.
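The two MarketsandMarkets figures imply a compound annual growth rate for 2022 to 2027, which a few lines of Python can check. This is a derived number, not one quoted in the report, and the helper name `implied_cagr` is our own.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1

# USD 12 billion (2022) growing to USD 17.2 billion (2027) spans five years.
cagr = implied_cagr(12.0, 17.2, 2027 - 2022)
print(f"Implied CAGR 2022-2027: {cagr:.1%}")  # roughly 7.5% per year
```

That works out to slower annual growth than the 10.7% reported for 2021 to 2022.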

What are the main considerations to keep in mind when choosing a machine vision system for a cobot-based application?

Universal Robots (UR) certifies numerous vision cameras to integrate seamlessly with UR cobots through the UR+ platform. DCL Logistics, a third-party logistics (3PL) fulfillment center in California, has deployed a UR10e cobot equipped with a Datalogic vision camera. Once a product is picked, the camera verifies its SKU and sends a “pass” or “fail” signal to the cobot. If the wrong product has been picked, the UR10e places it in a reject bin.
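The pass/fail hand-off described in the DCL Logistics example can be sketched as two small decision functions. This is a hypothetical illustration only: the names `Verdict`, `verify_pick`, and `place_target` and the bin labels are invented for clarity and do not reflect the actual Datalogic or UR interfaces.

```python
from enum import Enum

class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"

def verify_pick(scanned_sku: str, expected_sku: str) -> Verdict:
    """Camera-side check (hypothetical): compare the SKU read from the item with the order line."""
    return Verdict.PASS if scanned_sku.strip() == expected_sku else Verdict.FAIL

def place_target(verdict: Verdict) -> str:
    """Robot-side reaction (hypothetical labels): good picks continue, wrong picks are rejected."""
    return "order_tote" if verdict is Verdict.PASS else "reject_bin"

print(place_target(verify_pick("SKU-1042", "SKU-1042")))  # order_tote
print(place_target(verify_pick("SKU-1043", "SKU-1042")))  # reject_bin
```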

1) Do You Need Machine Vision?

Are you sure that your application requires machine vision? Could it be performed using traditional sensors or fixturing instead? Some cobots have built-in, user-friendly palletizing wizards that let them pick up parts placed in a grid pattern on a pegboard without any vision at all. Similarly, simple sorting and detection applications that don’t require high precision can often be carried out using traditional sensors.
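Because the parts sit at known positions on a fixtured grid, every pick location can be computed from an origin and a pitch, with no camera involved. The sketch below assumes a flat pegboard aligned with the robot's X and Y axes; the function name and the dimensions are hypothetical.

```python
from typing import List, Tuple

def grid_pick_positions(origin_mm: Tuple[float, float], pitch_mm: Tuple[float, float],
                        rows: int, cols: int) -> List[Tuple[float, float]]:
    """Return (x, y) pick positions in mm, row by row, for a regular grid of parts."""
    x0, y0 = origin_mm
    dx, dy = pitch_mm
    return [(x0 + c * dx, y0 + r * dy) for r in range(rows) for c in range(cols)]

# A hypothetical 2 x 3 pegboard: first peg at (100, 200) mm, pegs 50 mm apart in X and 40 mm in Y.
for position in grid_pick_positions((100.0, 200.0), (50.0, 40.0), rows=2, cols=3):
    print(position)
```

The catch is that this approach cannot notice a missing or shifted part; once part positions vary, some form of sensing or vision becomes necessary.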

All that said, many critical industrial tasks require some sort of machine vision system. These include applications involving object recognition, variable object locations, quality inspection tasks, and safety.

2) Location, Inspection, or Safety?

Before purchasing a cobot arm with built-in vision, make sure that vision solution is right for your application. Cobots that integrate seamlessly with a wide range of vision solutions, from entry-level to advanced, are in high demand, because broad compatibility provides more flexibility and helps future-proof your initial cobot investment.

The vast majority of vision-based applications fall into one of three subdomains: location (including path planning), inspection, and safety. For part location applications, the vision system has to be capable of accurate object recognition and pose estimation.
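In its simplest 2D form, pose estimation means finding a part's centroid and orientation in the image. The sketch below uses image moments, one classical technique among several (commercial tools often use template matching or learned models instead), and assumes the part has already been segmented into a list of foreground pixel coordinates. The function name is hypothetical, and the result is in pixels; mapping it into robot coordinates would additionally require camera-to-robot calibration.

```python
import math
from typing import Iterable, Tuple

def planar_pose(pixels: Iterable[Tuple[int, int]]) -> Tuple[float, float, float]:
    """Return (cx, cy, angle_deg) of a segmented blob from its image moments.

    angle_deg is the orientation of the blob's major axis, measured from the
    image x axis toward the y axis (y points down in most image coordinates).
    """
    pts = list(pixels)
    if not pts:
        raise ValueError("no foreground pixels")
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Second-order central moments describe how the blob spreads around its centroid.
    mu20 = sum((x - cx) ** 2 for x, _ in pts)
    mu02 = sum((y - cy) ** 2 for _, y in pts)
    mu11 = sum((x - cx) * (y - cy) for x, y in pts)
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, math.degrees(angle)

# A thin diagonal bar of pixels: centroid at (4.5, 4.5), major axis at 45 degrees.
bar = [(i, i) for i in range(10)]
print(planar_pose(bar))
```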

For quality inspection roles, the system should be able to detect extremely minute defects. This requires high-resolution cameras and advanced image processing software.
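A quick way to turn "detect minute defects" into a camera specification is to work out how many pixels must span the field of view. The sketch assumes the common rule of thumb that the smallest defect should cover about three pixels; the right factor depends on the algorithm, optics, and contrast, and the function name and example numbers are hypothetical.

```python
import math

def required_pixels(field_of_view_mm: float, smallest_defect_mm: float,
                    pixels_per_defect: int = 3) -> int:
    """Minimum pixels across the field of view so the smallest defect spans
    `pixels_per_defect` pixels (a rule-of-thumb assumption, not a standard)."""
    pixels = field_of_view_mm * pixels_per_defect / smallest_defect_mm
    # Round first so floating-point noise (e.g. 3600.0000000001) doesn't add a pixel.
    return math.ceil(round(pixels, 6))

# Inspecting a 120 mm wide PCB for 0.1 mm solder defects:
print(required_pixels(120.0, 0.1))  # 3600
```

Shrinking the defect size or widening the field of view raises the requirement proportionally, which is why fine inspection pushes toward high-resolution cameras or smaller fields of view per image.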

For those all-important safety applications, such as detecting when a human has approached and/or entered the cobot’s workspace, you’ll need a machine vision system with real-time processing capabilities, tracking functionality, and robust object detection.
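At its simplest, the real-time part of a safety application is a check, repeated on every frame, of how close each tracked person is to the robot. The sketch below is a toy illustration, not a safety-rated implementation: the zone radii, the function name, and the stop/slow policy are hypothetical, and certified systems rely on safety-rated sensors and controllers.

```python
import math
from typing import Iterable, Tuple

# Hypothetical zone radii around the robot base, in metres.
STOP_RADIUS_M = 0.5
SLOW_RADIUS_M = 1.5

def speed_command(people_xy: Iterable[Tuple[float, float]],
                  robot_xy: Tuple[float, float] = (0.0, 0.0)) -> str:
    """Return 'stop', 'slow', or 'full' based on the closest tracked person."""
    distances = [math.dist(p, robot_xy) for p in people_xy]
    if not distances:
        return "full"
    closest = min(distances)
    if closest < STOP_RADIUS_M:
        return "stop"
    if closest < SLOW_RADIUS_M:
        return "slow"
    return "full"

print(speed_command([]))                        # full
print(speed_command([(3.0, 0.0), (1.0, 0.5)]))  # slow
print(speed_command([(0.3, 0.2)]))              # stop
```

Because the check runs once per frame, detection and tracking latency determine how far a person can move before the robot reacts.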

Universal Robots has an expansive UR+ ecosystem of partners developing vision solutions that integrate seamlessly with UR cobot arms. Combining UR+ partner Photoneo's 3D Meshing with a MotionCam3D color camera mounted on a UR cobot allows fast creation of 3D object models for manufacturing validation, quality control, and further processing.

3) Lighting

Lighting has a major impact on image quality and on the performance of vision systems. Some vision systems require consistent, high-contrast lighting conditions, and some of these come with built-in illumination to provide it.

Other machine vision solutions cope easily with variable lighting, so changes in ambient light levels over the course of a day have no impact on their performance. Even a change as small as switching from fluorescent to LED lighting can cause some vision systems to fail, so make sure to explore this topic with your integration team.
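One practical safeguard against lighting drift is to monitor simple image statistics and flag frames that stray from the conditions the system was set up under. The sketch below computes mean brightness and Michelson contrast for a grayscale frame stored as nested lists of 0 to 255 values; the function names and tolerance values are hypothetical and would need tuning for a real cell.

```python
from typing import List, Tuple

def lighting_stats(gray: List[List[int]]) -> Tuple[float, float]:
    """Return (mean brightness, Michelson contrast) for a grayscale frame."""
    values = [v for row in gray for v in row]
    mean = sum(values) / len(values)
    lo, hi = min(values), max(values)
    contrast = (hi - lo) / (hi + lo) if hi + lo else 0.0
    return mean, contrast

def lighting_ok(gray: List[List[int]], ref_mean: float,
                max_mean_shift: float = 25.0, min_contrast: float = 0.5) -> bool:
    """Flag frames whose brightness drifted or whose contrast collapsed (hypothetical limits)."""
    mean, contrast = lighting_stats(gray)
    return abs(mean - ref_mean) <= max_mean_shift and contrast >= min_contrast

reference = [[20, 200], [30, 210]]  # frame captured at setup time
dimmer = [[10, 90], [15, 100]]      # same scene under weaker lighting
ref_mean, _ = lighting_stats(reference)
print(lighting_ok(reference, ref_mean))  # True
print(lighting_ok(dimmer, ref_mean))     # False
```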

4) 2D or 3D Camera?

2D robotic vision is highly effective for a wide range of simple applications. Typical examples include barcode reading, label orientation, and print verification. That said, 2D cameras provide only length and width information, not depth, which significantly limits the range of applications they can handle.

At the other end of the spectrum, 3D cameras provide highly accurate depth and orientation information, making them a good fit for applications that demand detailed information about an object’s precise location, size, surface angles, volume, flatness, and other features. Advanced inspection applications that must detect minute defects in a product’s finish perform best with 3D camera systems.
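The difference between 2D and 3D shows up clearly in the standard pinhole camera model. A pixel (u, v) only fixes a ray leaving the camera; recovering a metric X, Y position also requires the depth Z, which a 3D camera measures per pixel and a 2D camera can only assume (for example, by knowing that parts lie on a fixed table plane). The intrinsics fx, fy, cx, cy below are hypothetical calibration values, and the function name is our own.

```python
from typing import Tuple

def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with measured depth into camera-frame (X, Y, Z) in metres."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return x, y, depth_m

# Hypothetical intrinsics for a 640 x 480 camera.
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# The same pixel at two different depths corresponds to two different points in space.
print(deproject(380, 240, 0.5, FX, FY, CX, CY))  # (0.05, 0.0, 0.5)
print(deproject(380, 240, 1.0, FX, FY, CX, CY))  # (0.1, 0.0, 1.0)
```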

5) Precision Requirements

Closely examine the accuracy, repeatability, and tolerance requirements of the application before you select a machine vision system for your cobot.

For example, applications such as microchip manufacturing and precision assembly require advanced vision systems with high-resolution cameras and complex image processing capabilities, while applications with more lenient tolerances can use less expensive vision systems.
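Accuracy and repeatability can be estimated from repeated measurements of a reference part. The sketch below treats accuracy as the mean offset from the nominal value and repeatability as the sample standard deviation, then checks whether the bias plus a 3σ spread fits inside the tolerance band. The 3σ convention, the function name, and the sample readings are assumptions for illustration; vendors define these terms in different ways.

```python
import statistics
from typing import Sequence

def fits_tolerance(measurements_mm: Sequence[float], nominal_mm: float,
                   tolerance_mm: float, k_sigma: float = 3.0) -> bool:
    """True if mean bias plus k-sigma spread stays within +/- tolerance of nominal."""
    bias = statistics.mean(measurements_mm) - nominal_mm  # accuracy (systematic error)
    spread = statistics.stdev(measurements_mm)            # repeatability (random error)
    return abs(bias) + k_sigma * spread <= tolerance_mm

# Ten hypothetical readings of a feature nominally 25.00 mm from a datum edge.
readings = [25.02, 24.98, 25.01, 25.03, 24.99, 25.00, 25.02, 25.01, 24.97, 25.00]
print(fits_tolerance(readings, 25.00, tolerance_mm=0.10))  # True
print(fits_tolerance(readings, 25.00, tolerance_mm=0.05))  # False
```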

6) Cycle Time

The processing speed of the machine vision system needs to align with the cycle time of the cobot operation. High-speed applications require systems with fast image capture and processing, but even a fast system needs time to process each image, and that latency must be factored into your cycle time calculations.
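A simple budget makes the trade-off concrete: add up the vision steps that must finish before the robot can act and compare the total with the target cycle time. All stage names and millisecond figures below are hypothetical, and the optional overlap term reflects the common practice of capturing or processing the next image while the arm is still moving.

```python
from typing import Dict

def cycle_time_ms(vision_ms: Dict[str, float], motion_ms: float, overlap_ms: float = 0.0) -> float:
    """Total cycle time: vision stages plus motion, minus any vision time hidden behind motion."""
    return sum(vision_ms.values()) + motion_ms - overlap_ms

vision = {
    "exposure": 10.0,
    "image_transfer": 25.0,
    "processing": 120.0,
    "result_to_robot": 5.0,
}
target_ms = 2000.0

total = cycle_time_ms(vision, motion_ms=1900.0)
print(total, total <= target_ms)  # 2060.0 False

total_overlapped = cycle_time_ms(vision, motion_ms=1900.0, overlap_ms=100.0)
print(total_overlapped, total_overlapped <= target_ms)  # 1960.0 True
```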

Radio frequency-based systems: A new alternative to machine vision

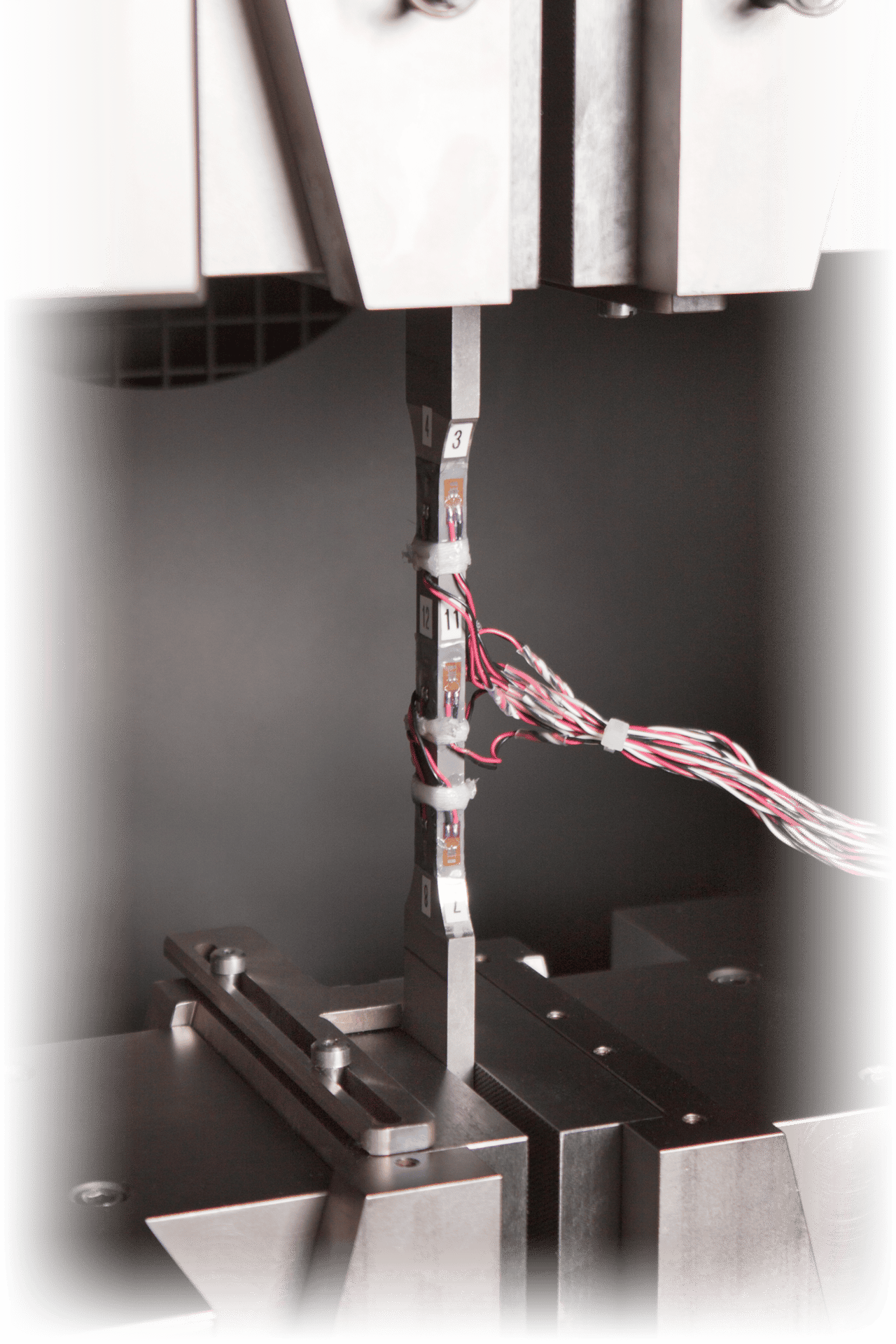

There are noteworthy alternatives to vision-based systems, including those that use radio frequencies rather than light to provide high-precision microlocation and sub-millimeter path planning for cobot arms in real time. These systems achieve levels of precision traditionally associated with the most advanced 3D vision systems.
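The article does not say how these systems compute position, but a common approach in radio-frequency ranging is trilateration: measure the distance to several fixed anchors (for example, from signal time of flight) and solve for the point consistent with all of them. The 2D sketch below illustrates that generic technique with hypothetical anchor positions; it is not a description of any vendor's product. Subtracting the first range equation from the other two turns the circle equations into a 2 x 2 linear system, solved here with Cramer's rule.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

def trilaterate_2d(anchors: Sequence[Point], ranges: Sequence[float]) -> Point:
    """Solve for (x, y) given three anchor positions and the measured distance to each."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = ranges
    # Linear equations obtained by subtracting circle 1 from circles 2 and 3.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det

# Hypothetical anchors at three corners of a 10 m x 10 m cell; the tag is actually at (3, 4).
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [5.0, 65 ** 0.5, 45 ** 0.5]
print(trilaterate_2d(anchors, ranges))  # approximately (3.0, 4.0)
```

Real systems typically use more anchors and a least-squares fit so that ranging noise averages out.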

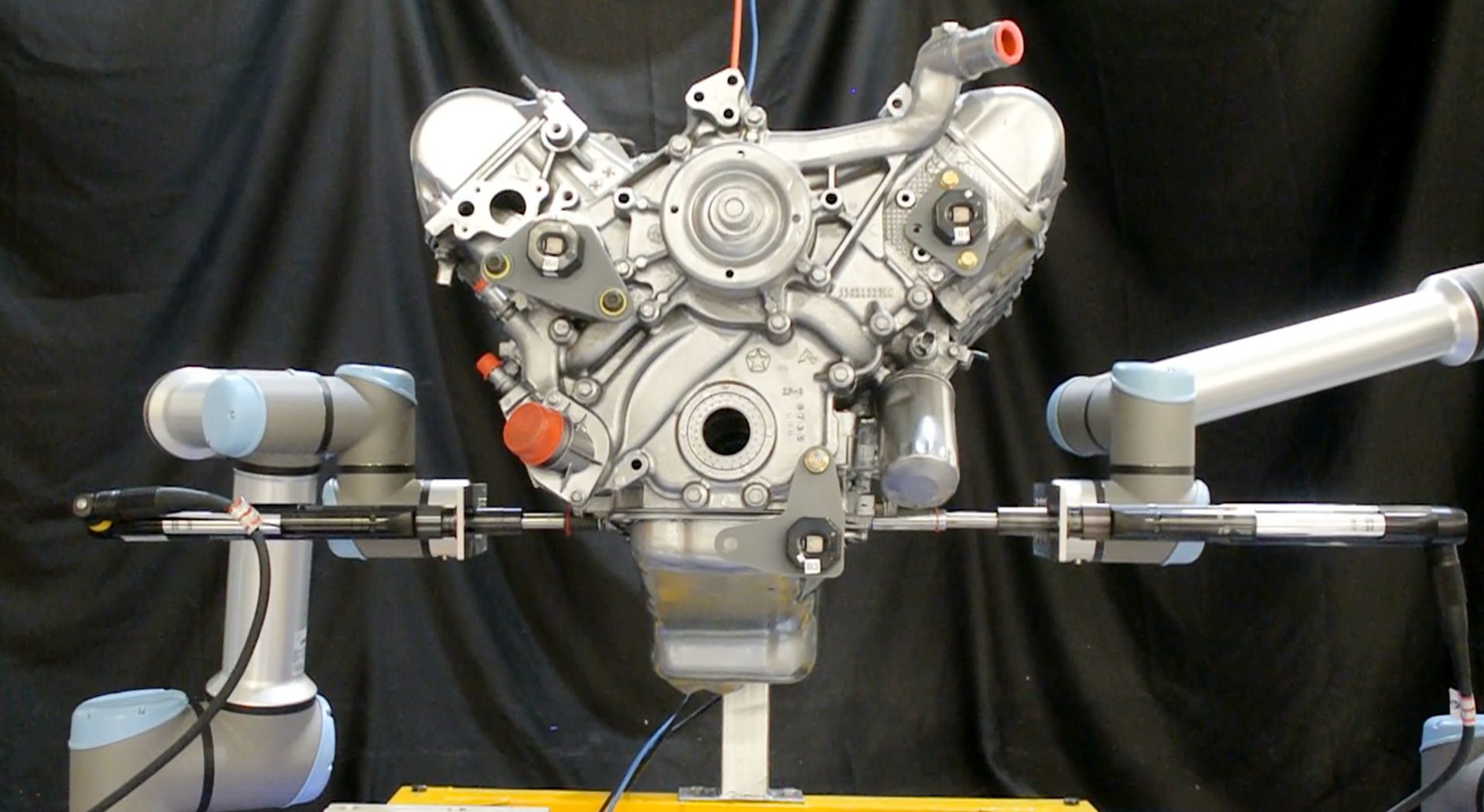

One such system is being developed by Humatics. Designed specifically for the automotive sector but applicable across numerous verticals, it enables extremely advanced applications, such as having two cobots work concurrently on an engine as it moves along a conveyor.

Radio frequency-based systems can outperform vision on some tasks, says Ron Senior, vice president of sales, marketing and business development at Humatics.

“The ability to process data in complex environments, such as an engine in motion, is well suited to the lightweight, highly rapid positional data that radio frequency provides. It’s very difficult to achieve the same performance with machine vision because of the image processing required,” he explains.
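Senior's point about processing time can be made quantitative. When the target is moving, every millisecond between measurement and action shifts the part by speed × latency, so a controller must either predict ahead or accept that error. The sketch assumes constant conveyor speed along one axis; the function names, speeds, and latencies are hypothetical.

```python
def position_error_mm(conveyor_speed_mm_s: float, latency_ms: float) -> float:
    """Distance the part travels between measurement and action."""
    return conveyor_speed_mm_s * latency_ms / 1000.0

def predicted_x_mm(measured_x_mm: float, conveyor_speed_mm_s: float, latency_ms: float) -> float:
    """Constant-velocity prediction of where the part is when the robot acts."""
    return measured_x_mm + position_error_mm(conveyor_speed_mm_s, latency_ms)

# A part moving at 200 mm/s:
print(position_error_mm(200.0, 100.0))      # 20.0 mm with a 100 ms delay
print(position_error_mm(200.0, 5.0))        # 1.0 mm with a 5 ms delay
print(predicted_x_mm(500.0, 200.0, 100.0))  # 520.0
```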